Why do lab results never live up to simulations when determining the capacity of optical fibre? That’s the question asked by Dr Lidia Galdino from University College London’s Department of Electronic and Electrical Engineering. She was surprised to discover that the transceivers used to transmit and receive optical signals have more of an impact on system performance than previously thought.

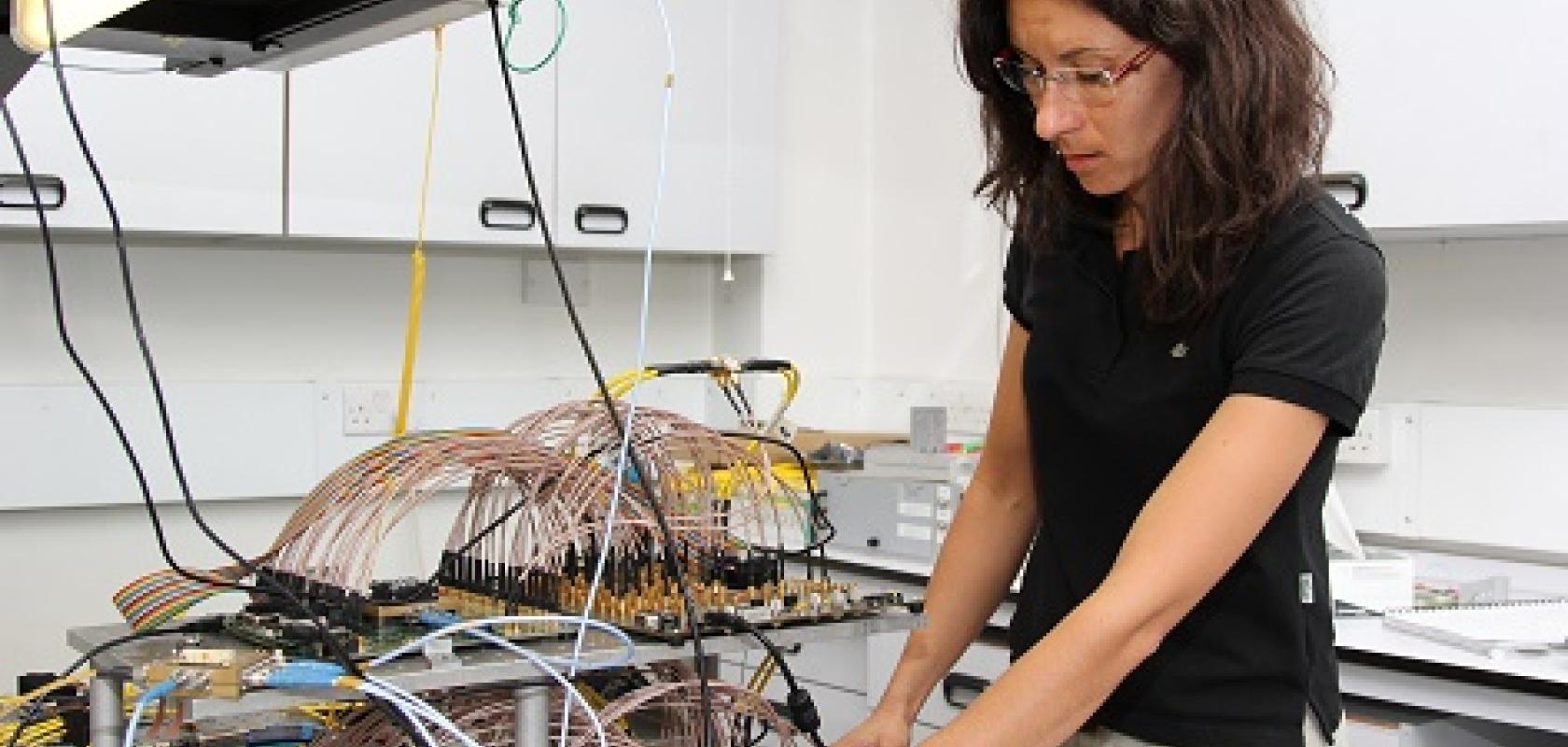

Working as part of the UNLOC research project, Dr Galdino’s research involves running experiments to find out how to maximise the capacity of optical fibre communications systems. These experiments test new techniques for compensating for nonlinearities, which ultimately limit the capacity of data transmission over standard optical fibre. A particular focus of the UCL team is a technique known as digital back-propagation (DBP). DBP detects the important properties of the light – amplitude and phase – and then digitally reverses the light’s journey to cancel out known distortions.

In labs around the world, researchers have consistently found that theoretical results greatly overestimate the gains they can achieve compared to practical experiments. Dr Galdino wanted to determine the cause of these discrepancies; essentially, what was everyone doing wrong?

Until now, researchers thought that polarisation mode dispersion (PMD), the random rotation of light polarisation in fibre, was the main reason lab results didn’t return the gains they had hoped for, because DBP cannot compensate for it. However, even after accounting for the observed degradation in nonlinearity compensation due to PMD, theory still substantially overestimated the gains measured in experiments.

This – it turns out – is because simulations assume ideal transceivers and, consequently, overestimate the performance of nonlinearity compensation techniques.

Realising this, Dr Galdino and her colleagues entered the specific parameters for their transceiver into a simulation. When the results from the lab matched the theory, they confirmed that the issue must be with transceiver noise.
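The effect of swapping an ideal transceiver for a realistic one can be illustrated with a deliberately simplified toy model. The sketch below is not the model from the paper: it assumes the link signal-to-noise ratio follows the common textbook form P / (noise + eta·P³), treats the transceiver as a fixed SNR ceiling, and ignores the nonlinear interaction between transceiver noise and signal that the paper addresses. All function names and numerical values are hypothetical, chosen only for illustration.

```python
import math


def peak_link_snr(noise_w, eta):
    """Peak of P / (noise_w + eta * P**3) over launch power P (linear units).

    Assumed toy model: noise_w is amplifier noise power in watts,
    eta is a nonlinear interference coefficient in 1/W^2.
    """
    # Setting the derivative to zero gives eta * P**3 = noise_w / 2.
    p_opt = (noise_w / (2.0 * eta)) ** (1.0 / 3.0)
    return p_opt / (noise_w + eta * p_opt ** 3)


def total_snr(link_snr, trx_snr):
    """Combine link SNR with a fixed transceiver SNR ceiling (linear units)."""
    if math.isinf(trx_snr):
        return link_snr  # ideal transceiver adds no noise
    return 1.0 / (1.0 / link_snr + 1.0 / trx_snr)


def compensation_gain_db(noise_w, eta, eta_reduction, trx_snr):
    """SNR gain in dB when nonlinearity compensation divides eta by eta_reduction."""
    before = total_snr(peak_link_snr(noise_w, eta), trx_snr)
    after = total_snr(peak_link_snr(noise_w, eta / eta_reduction), trx_snr)
    return 10.0 * math.log10(after / before)


# Hypothetical values, for illustration only.
NOISE_W = 2e-5                 # accumulated amplifier noise, watts
ETA = 500.0                    # nonlinear coefficient, 1/W^2
ETA_REDUCTION = 100.0          # assumed suppression of nonlinear interference
TRX_SNR = 10 ** (22.0 / 10.0)  # assumed 22 dB transceiver SNR ceiling

ideal_gain = compensation_gain_db(NOISE_W, ETA, ETA_REDUCTION, math.inf)
real_gain = compensation_gain_db(NOISE_W, ETA, ETA_REDUCTION, TRX_SNR)
print(f"gain with ideal transceiver:     {ideal_gain:.2f} dB")
print(f"gain with realistic transceiver: {real_gain:.2f} dB")
```

With these made-up numbers, the predicted gain from nonlinearity compensation falls from about 6.7 dB with an ideal transceiver to roughly 3 dB with the assumed 22 dB ceiling. Even this crude model shows how a simulation that assumes ideal transceivers can overstate the achievable gain.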

“This is hugely significant for the design of fibre infrastructure. It is now possible to identify all sources of error in system performance and, therefore, to find techniques to mitigate them,” she said.

In a paper published in Optics Express, Dr Galdino demonstrates for the first time that transceiver noise interferes nonlinearly with the signal, meaning it doesn’t increase proportionately as signal power is increased. Before now, researchers underestimated the impact of transceiver noise by assuming that the interference it caused increased at a steady rate as signal power increased.

“We’ve proposed a new approach that correctly accounts for the nonlinear interference between the transceiver noise and signal in an optical fibre link. For the first time, every system designer can easily predict their transmission system performance in seconds,” said PhD student Daniel Semrau, co-author of the study.

The noise produced by the transceiver comes from digital-to-analogue and analogue-to-digital converters in the transmitter and receiver respectively. This fundamental noise is present even in our state-of-the-art equipment, but in time manufacturers will be able to improve on this. The UNLOC research will help systems designers to correctly predict and design next-generation, high-performance optical transmission systems, said Dr Galdino.

“We should consider transceiver noise as a more fundamental limit to performance than PMD,” said Dr Galdino. “Importantly we can now precisely estimate the achievable gains by applying DBP in realistic optical systems. We’ve been doing all the right things and DBP is still one of the most powerful ways to maximise capacity or increase transmission distances.”