Geoff Bennett outlines three myths surrounding optical capacity, and how they can be dispelled

Subsea cable construction is experiencing a new golden age. We’ve seen 13 new cable systems built with a cumulative value of more than $2.4 billion since 2016, and more than $1.5 billion has been invested in new Latin American cables alone in 2017, according to market research firm TeleGeography.

These new systems have been explicitly designed for the latest generation of coherent transmission, and numerous articles have explored this technology in depth. Here, I will explain how the most effective enhancements in cable capacity can be achieved, and try to dispel three commonly held capacity myths in the process.

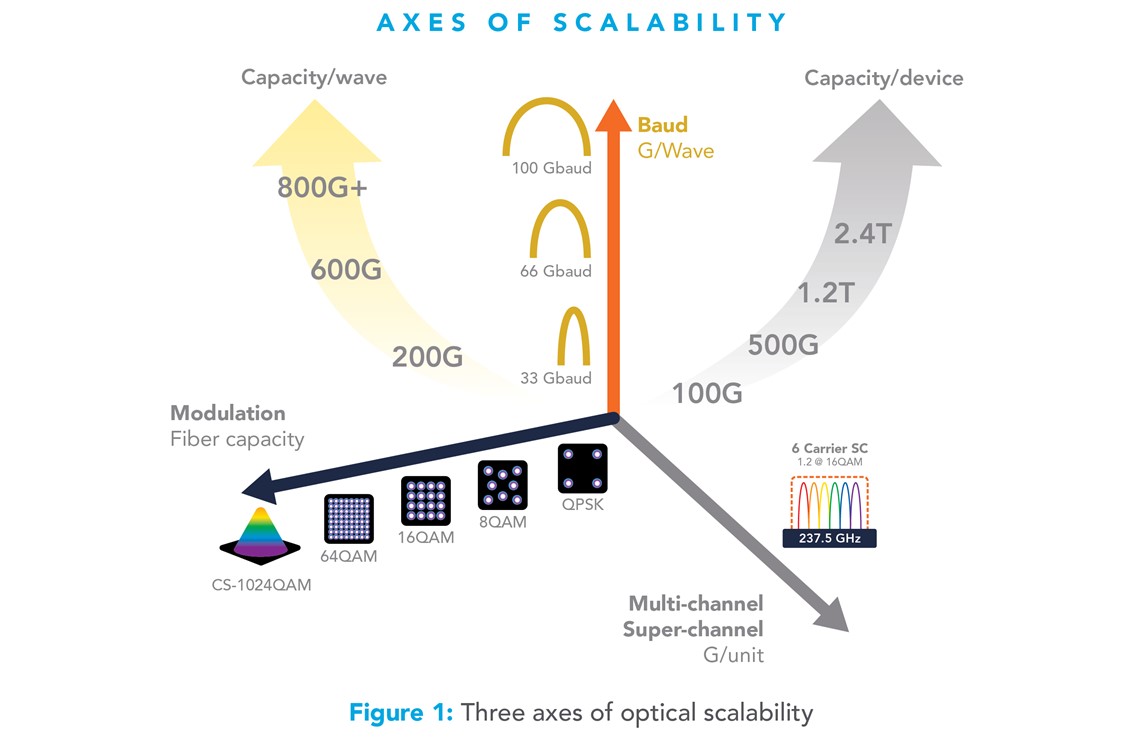

There are three axes needed for optical scaling – baud rate, modulation order and integration. In simple terms, we can think of scaling in two ways: the capacity per line card or appliance and the total capacity that can be achieved on the fibre. If we scale one, does that mean we also scale the other? Not always.

Let’s take the most common coherent optical transponder form factor, which is a 100Gb/s transponder. How can we scale this form factor to increase the capacity per line card? Note that this is a key metric for optical network operators because the higher the line card capacity, the more ‘productive’ an engineer will be when they bring new capacity into service.

Myth #1: increasing baud rate increases fibre capacity

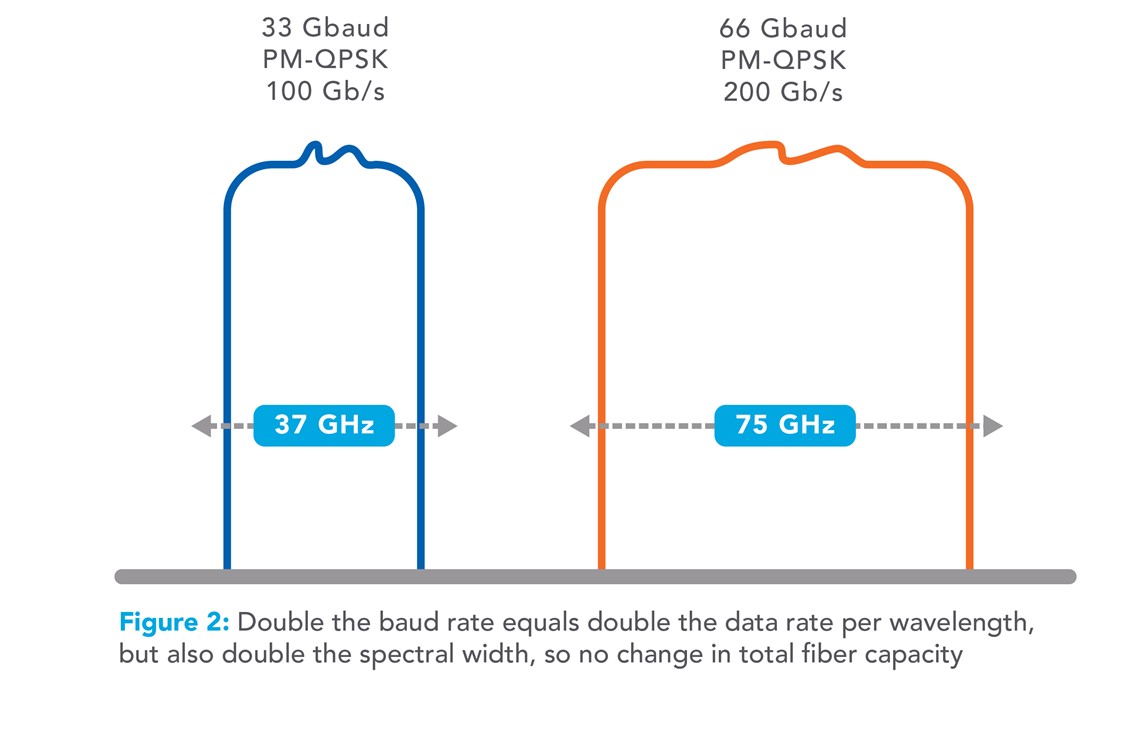

A 100Gb/s transponder typically operates at 33GBd, with each symbol carrying four bits using polarisation-multiplexed (PM) quadrature phase-shift keying (QPSK) modulation. Increasing only the baud rate to 66GBd doubles the wavelength data rate to 200Gb/s. At the same time, there is typically a small reduction in reach – perhaps by 10 to 15 per cent, for reasons I explain below. However, the total fibre capacity will not change when the baud rate alone is increased – the explanation for this is shown in Figure 1.

On the left is a 33GBd, 100Gb/s wavelength, which occupies roughly 37GHz of optical spectrum when using the latest Nyquist pulse shaping. On the right is a 66GBd, 200Gb/s wavelength, which occupies double the optical spectrum, at about 75GHz. So the capacity per GHz is unchanged, which means that the capacity per fibre is also unchanged. As I mentioned already, increasing the baud rate makes the installation engineer more productive; it also tends to reduce the hardware cost per bit and raises the capacity per chassis. All these are extremely valuable enhancements, but there is no improvement in total fibre capacity.
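The arithmetic behind this can be sketched in a few lines of Python. The data rates and spectral widths are the approximate figures quoted above; the usable spectrum of 4,800GHz is purely an assumption for illustration and is not taken from any particular cable.

```python
# Figures quoted in the text; the spectral widths are approximate.
WAVELENGTHS = {
    "33GBd PM-QPSK": {"rate_gbps": 100, "width_ghz": 37},
    "66GBd PM-QPSK": {"rate_gbps": 200, "width_ghz": 75},
}

# ASSUMPTION: usable optical bandwidth, chosen only for illustration.
USABLE_SPECTRUM_GHZ = 4800


def capacity_per_ghz(rate_gbps, width_ghz):
    """Spectral efficiency in Gb/s per GHz."""
    return rate_gbps / width_ghz


def fibre_capacity_gbps(rate_gbps, width_ghz, spectrum_ghz=USABLE_SPECTRUM_GHZ):
    """Total capacity when the spectrum is filled with whole wavelengths."""
    channels = int(spectrum_ghz // width_ghz)
    return channels * rate_gbps


for name, w in WAVELENGTHS.items():
    se = capacity_per_ghz(**w)
    total_tbps = fibre_capacity_gbps(**w) / 1000
    print(f"{name}: {se:.2f} Gb/s per GHz, about {total_tbps:.1f} Tb/s per fibre")
```

Under these assumptions the two cases land within about one per cent of each other: half as many wavelengths, each carrying twice the data.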

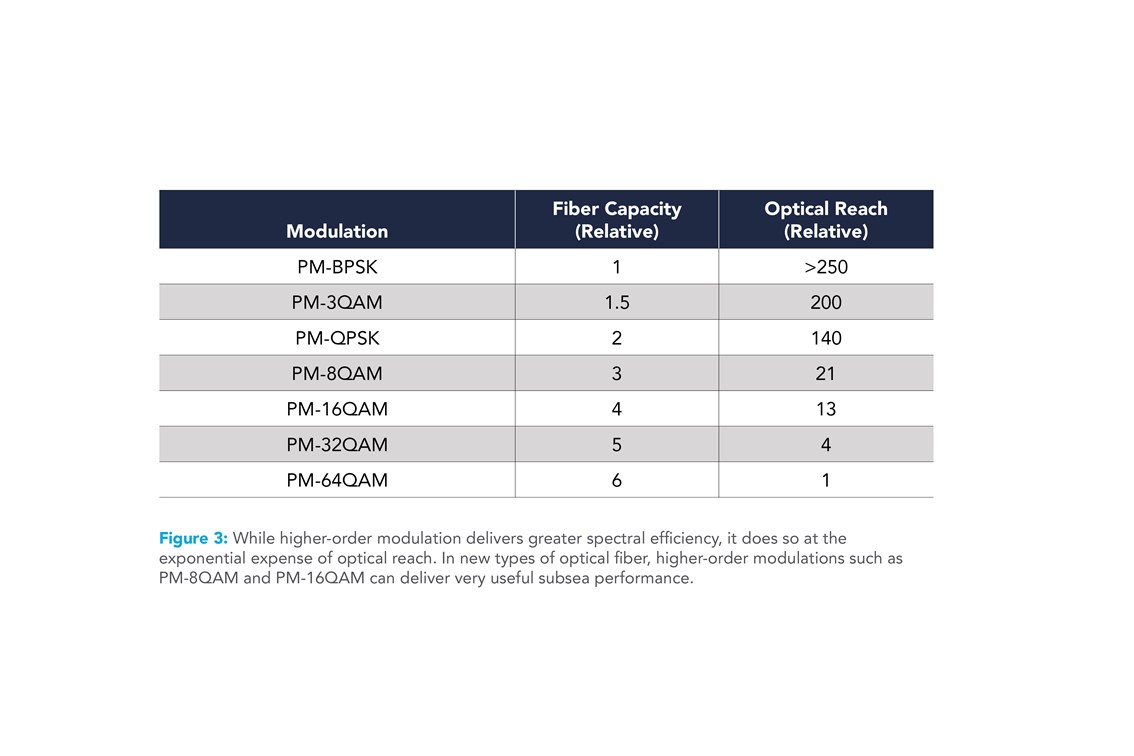

Myth #2: increasing modulation order increases subsea fibre capacity

I should preface my explanation of this myth by saying that it is partially true. If we hold baud rate constant at 33GBd, but move from PM-QPSK to PM-16 quadrature amplitude modulation (QAM), then the transponder data rate increases from 100Gb/s to 200Gb/s, and the spectral width stays at about 37GHz. So the spectral efficiency doubles… but there is also bad news.

As we increase modulation order, the optical reach drops exponentially, because of the higher non-linear penalty for higher-order modulation symbols. This can be mitigated by the new large mode area fibre types being used in new subsea cable systems, which is why a modern trans-Pacific link could potentially be closed using PM-8QAM, and a modern trans-Atlantic link using PM-16QAM.
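The data-rate side of this trade-off can be tabulated with a short sketch. It scales from the 100Gb/s PM-QPSK baseline in the text and assumes, for simplicity, that FEC and framing overhead stay the same at every modulation order. It says nothing about reach, which is the other half of the story.

```python
import math

QPSK_RATE_GBPS = 100     # net rate of a 33GBd PM-QPSK wavelength (from the text)
SPECTRAL_WIDTH_GHZ = 37  # approximate width at 33GBd (from the text)


def bits_per_symbol(constellation_points, polarisations=2):
    """Bits carried per symbol across both polarisations."""
    return int(math.log2(constellation_points)) * polarisations


def net_rate_gbps(constellation_points):
    """Net rate at 33GBd, scaled from the PM-QPSK baseline.

    ASSUMPTION: overhead is identical for every modulation format.
    """
    return QPSK_RATE_GBPS * bits_per_symbol(constellation_points) / bits_per_symbol(4)


for label, points in [("PM-QPSK", 4), ("PM-8QAM", 8), ("PM-16QAM", 16)]:
    rate = net_rate_gbps(points)
    print(f"{label}: {bits_per_symbol(points)} bits/symbol, "
          f"{rate:.0f} Gb/s, {rate / SPECTRAL_WIDTH_GHZ:.2f} Gb/s per GHz")
```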

Myth #3: PIC-based superchannels have the same spectral efficiency as discrete transponders

The third axis of scaling is the process of integrating multiple wavelengths onto a single line card or appliance using ‘large-scale’ (i.e. multi-wavelength) photonic integrated circuits (PICs). PIC-based line cards and appliances have all the optical components for multiple wavelengths integrated onto a single pair of optical chips – one for transmit and one for receive – allowing a linear increase in line card or appliance capacity and no corresponding optical reach penalty. There are, for example, PIC-based 1.2 terabits per second (Tb/s) and 2.4 Tb/s appliances on the market today.

The set of wavelengths that emerges from the line card or appliance is known as a superchannel. The third myth assumes that a PIC-based superchannel has the same spectral efficiency as if you had created this superchannel from multiple coherent transponders (something that is perfectly possible). Recently, multiple subsea field trials and commercial deployments have proven this myth to be false. In fact, a PIC-based superchannel is more spectrally efficient – one of the main reasons is shown in Figure 2.

On the left we see the Nyquist-shaped wavelengths from six 200Gb/s transponders. Nyquist shaping means that these can be closely spaced on the fibre for enhanced spectral efficiency. However, the wavelength-locking circuits for each wavelength operate independently, so a safe minimum spacing is needed to ensure that adjacent channels do not interfere with each other. If this were an animated diagram, you would see the individual peaks drifting independently left and right of their nominal centre point, so they need to be spaced accordingly. In contrast, on the right, the set of six waves from a single PIC uses a single wavelength-locking circuit, so any drift is correlated across the comb of waves and the waves can be spaced more closely.
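A deliberately simplified model shows why correlated drift matters. The 37GHz width per wave and the ±1.5GHz drift allowance below are hypothetical values chosen for illustration, not measurements from any product, and the model ignores every other factor that affects channel spacing.

```python
def superchannel_width_ghz(n_waves, wave_width_ghz, drift_ghz, correlated):
    """Spectrum needed for a group of waves, including drift allowance.

    Simplified model: a wave may drift by +/- drift_ghz around its
    nominal centre frequency.
    """
    occupied = n_waves * wave_width_ghz
    if correlated:
        # The comb moves as one unit, so allowance is needed only
        # at the two outer edges of the superchannel.
        guard = 2 * drift_ghz
    else:
        # Each wave moves on its own, so every wave needs allowance
        # on both sides.
        guard = 2 * drift_ghz * n_waves
    return occupied + guard


# HYPOTHETICAL inputs: six 200Gb/s waves, 37GHz wide, +/-1.5GHz drift.
N_WAVES, WIDTH_GHZ, DRIFT_GHZ, RATE_GBPS = 6, 37.0, 1.5, 200

for label, correlated in [("discrete transponders", False), ("single PIC", True)]:
    width = superchannel_width_ghz(N_WAVES, WIDTH_GHZ, DRIFT_GHZ, correlated)
    print(f"{label}: {width:.0f} GHz, {N_WAVES * RATE_GBPS / width:.2f} Gb/s per GHz")
```

With these made-up numbers the same 1.2Tb/s fits into 225GHz rather than 240GHz; the real gain depends on the actual drift tolerance of the lasers involved.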

Two more tricks in the toolkit

While PIC-based channel spacing is a big advantage in terms of spectral efficiency, there are two other tools that can be used to enhance optical reach, spectral efficiency or both. They are Nyquist subcarriers, which can also be used on single-wavelength transponders, and soft-decision (SD) forward error correction (FEC) gain sharing, which requires at least two wavelengths to be processed by the same chip, as is the case in a PIC-based implementation.

One of the hottest optical technologies being discussed at conferences at the moment is the idea of Nyquist subcarriers. With this approach, the transmitter in the coherent implementation uses sophisticated signal processing to split the light from the laser into multiple subcarriers. This allows each subcarrier to be modulated independently – for example, in a seemingly 66GBd signal with six subcarriers, each would actually be modulated at about 11GBd.

The key point is that the electronics industry finds it much easier to deliver processing power in parallel, rather than in series, so subcarriers provide an ideal way to ‘go parallel’ and deliver more potential coherent processing power for impairment compensation.
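As a small illustration of what ‘going parallel’ means in numbers, the sketch below divides the 66GBd example from the text across six subcarriers and shows the longer symbol period each parallel processing lane then has to work with. The function names are my own, for illustration only.

```python
def subcarrier_symbol_rate_gbd(aggregate_gbd, n_subcarriers):
    """Symbol rate of each subcarrier when the aggregate is split evenly."""
    return aggregate_gbd / n_subcarriers


def symbol_period_ps(symbol_rate_gbd):
    """Duration of one symbol in picoseconds."""
    return 1000.0 / symbol_rate_gbd


aggregate = 66   # GBd, from the example in the text
subcarriers = 6

per_subcarrier = subcarrier_symbol_rate_gbd(aggregate, subcarriers)
print(f"Each subcarrier: {per_subcarrier:.0f} GBd")
print(f"Symbol period, single carrier: {symbol_period_ps(aggregate):.1f} ps")
print(f"Symbol period, per subcarrier: {symbol_period_ps(per_subcarrier):.1f} ps")
```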

The final capability in the toolbox makes direct use of multi-wavelength implementations in which the data streams from two or more wavelengths are passed through the same FEC decoder. The principle is shown in Figure 3. In this case there are two wavelengths, blue and red, and let’s assume the objective is to bring both into service using PM-8QAM modulation. The blue wavelength is in a benign part of the fibre spectrum, which typically has high chromatic dispersion, while the red is in a low-dispersion part of the spectrum, exposed to a more hostile set of impairments.

In a traditional implementation, the two signals would be processed by independent FEC engines, so the additional FEC margin that the blue wavelength enjoys cannot be shared with the red wavelength. Since the red wavelength is below the PM-8QAM commissioning limit, the only option would be to dial this down to PM-QPSK modulation, at a loss of one-third of that wavelength's capacity (PM-8QAM carries six bits per symbol, PM-QPSK only four).

In a PIC-based multi-wavelength implementation, the two signals are processed by the same FEC engine. This allows the margin to be shared, which effectively ‘pulls up’ the red wavelength using the excess FEC margin from the blue wavelength, so both are now above the PM-8QAM commissioning limit. Thus, SD-FEC gain sharing can achieve higher capacity over a longer distance, or avoid compromising capacity for distance.
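The blue and red example can be expressed as a toy model. Everything numeric here is hypothetical: the pre-FEC bit error rate (BER) limit, the two BER values and the idea that a shared decoder simply sees the average BER of the two wavelengths are simplifying assumptions for illustration, not a description of any real FEC design. The 150Gb/s figure for PM-8QAM assumes 33GBd with the same overhead as the 100Gb/s PM-QPSK case.

```python
# ASSUMPTION: net rates at 33GBd with identical overhead for both formats.
RATE_GBPS = {"PM-QPSK": 100, "PM-8QAM": 150}

# HYPOTHETICAL: highest pre-FEC BER at which PM-8QAM can be commissioned.
BER_LIMIT = 2.0e-2

# HYPOTHETICAL pre-FEC BER of each wavelength when running PM-8QAM.
pre_fec_ber = {"blue": 1.0e-2, "red": 2.6e-2}


def capacity_independent(bers):
    """Each wavelength has its own FEC engine and must pass on its own.

    A wavelength that fails at PM-8QAM is assumed to work at PM-QPSK.
    """
    total = 0
    for ber in bers.values():
        total += RATE_GBPS["PM-8QAM"] if ber <= BER_LIMIT else RATE_GBPS["PM-QPSK"]
    return total


def capacity_shared(bers):
    """One FEC engine handles all wavelengths.

    Toy model: the decoder sees the mean BER of the group.
    """
    mean_ber = sum(bers.values()) / len(bers)
    if mean_ber <= BER_LIMIT:
        return RATE_GBPS["PM-8QAM"] * len(bers)
    return capacity_independent(bers)


print(f"Independent FEC: {capacity_independent(pre_fec_ber)} Gb/s")
print(f"Shared FEC:      {capacity_shared(pre_fec_ber)} Gb/s")
```

In this sketch the red wavelength alone misses the limit, but the pair together clears it, so both can stay at PM-8QAM.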

Proving the point

In a subsea network, impressive PowerPoint claims do not deliver a competitive advantage, so the field trial is the ultimate proof point. Since September 2017, when we partnered with Seaborn Networks to demonstrate a record-breaking 18.2Tb/s capacity over the Seabras-1 cable, other field trials have shown an improvement in capacity of between 30 and 50 per cent using the same technology. The latest of these field trials again breaks a significant record – delivering more than 20Tb/s of capacity over a trans-Atlantic link using production equipment, with commercial transmission margins. Our three myths are well and truly busted… and subsea operators need all the advanced tools at their disposal to squeeze capacity from the sands of an optical fibre.

Geoff Bennett is director of solutions and technology at Infinera