Dr Danish Rafique offers a strategic blueprint for the optical networking sector

Whatever buzzword you use to describe it, analytics is today’s frontline trend. It is backed by continual product announcements from countless vendors; it promises the world for almost everything; and indeed, it does seem cool. The missing piece, however, is how exactly it’s going to help any particular industry.

There’s no sector-specific blueprint which can be treated as a standard operating procedure. Talking to consultants, attending tradeshows and surfing your way through tons of online resources will make you superficially smart, but you don’t know what you don’t know. What’s specifically different from legacy solutions? What does your particular business have to gain from it? What sort of skillsets do you need? What are the infrastructure requirements?

And it’s not only a concrete technological strategy and roadmap that are missing; medium to long-term business objectives remain extremely vague, making it hard to see beyond the hype in several industries.

However, unlike many artificial-intelligence-based technologies limited to the sci-fi world, analytics – and in particular machine learning – is a reality at this point in time. As such, it’s at best a waste and at worst reckless for the optical networking markets to miss out on what these emerging technologies could offer.

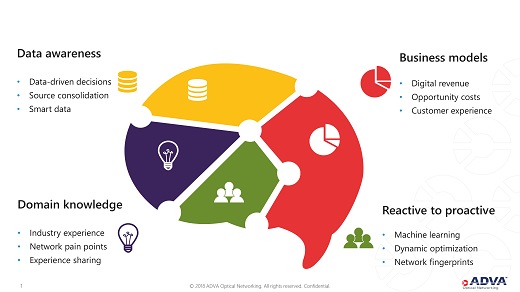

Let’s explore four cornerstones of enabling analytics for the optical networking industry.

Domain knowledge

First things first: the world of analytics needs to be built around solid domain-specific knowledge. It’s quite easy to get carried away with the gleaming promises of the massive toolset and solution space of the analytics ecosystem. In reality, there exists no magical tool or set of frameworks that can seamlessly integrate with standing networking product portfolios.

What’s needed is for the experts in optical network design, operation and management to carefully analyse areas and problems where analytics could be helpful, and then make informed decisions in the context of available technologies. These experts are the secret sauce behind network digitisation, as they not only know how optical transmission systems work, but are familiar with operational processes and needs – from vendor and consumer viewpoints.

These experts need to determine network pain points, possible solution spaces and design considerations, and consequently replace the conventional reactive approaches with advanced machine learning strategies and frameworks. While specific expertise in statistics, mathematics and machine learning will surely be useful, relying on it alone is neither optimal nor likely to achieve what the domain experts can. Equally, people who have network and user knowhow will need to substantially augment their knowledge with software architecture definition and implementation; it’s this killer combination which will drive analytics into the optical networking sector, with these experts placed at the core of this digital network ecosystem.

Data awareness

Optical networks generate a plethora of data with extremely high granularity, including operational, asset, infrastructure, process and planning data pools. However, as there are typically no frameworks in place to systematically access, extract, store and analyse this data, this treasure-trove remains largely untapped. While organisation-locked management tools are available, and used today, they are both restrictive in their capabilities and generally non-integrable with open platforms, essentially rendering them impractical for scaled optical networking. What’s required is, in fact, a cultural shift in the business, making data-driven decisions part of the organisation’s core.

On the one hand, storage, data source integration and access are important. The issue, however, is that network data is high-dimensional, varying at each level of the system hierarchy. On the other hand, tools are available to process massive datasets, but they are expensive, with some even charging per data transaction or by data-refresh rate – a business model the optical industry is not familiar, or comfortable, with.

Network topologies, performance metrics, and inventories must be synchronised to get a bird’s eye view of how the various devices and facilities function, and which features are most relevant. The decisive criterion here isn’t necessarily the amount of data, but its value in terms of significance, quality, etc.

In a large meshed network, several network nodes support petabytes of traffic, generating a massive data pool that includes the number of flows, error rates, retransmit rates, capacity, optical power levels, accumulated distortions and configurations, to name a few. Only if domain experts can narrow this down to reflect abstract network behaviour does it become clear how to adequately process and analyse this data; this is where data awareness ties back to domain knowledge. Consequently, the focus needs to shift from big data to smart data collection and processing, which will serve as the key market differentiator going forward.
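As a rough illustration of narrowing a data pool down to the metrics that matter, the sketch below drops metrics that never change and ranks the rest by how strongly they track a target of interest. Everything in it is an assumption made for illustration: the metric names, the tiny synthetic samples, the choice of error rate as the target, and the use of plain Pearson correlation as a stand-in for whatever relevance measure a domain expert would actually choose.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation, or None if either series has zero variance."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    var_x = sum(d * d for d in dx)
    var_y = sum(d * d for d in dy)
    if var_x == 0 or var_y == 0:
        return None  # a constant series carries no information here
    return sum(a * b for a, b in zip(dx, dy)) / sqrt(var_x * var_y)


def rank_metrics(metrics, target):
    """Return metric names ordered by absolute correlation with the target."""
    scored = []
    for name, values in metrics.items():
        r = pearson(values, target)
        if r is not None:  # skip metrics that never vary
            scored.append((abs(r), name))
    return [name for _, name in sorted(scored, reverse=True)]


# Hypothetical samples from one link (illustrative values only)
error_rate = [1, 2, 3, 4, 5]
metrics = {
    "optical_power_dbm": [-10, -11, -12, -13, -14],
    "flow_count": [5, 3, 6, 2, 4],
    "config_version": [7, 7, 7, 7, 7],
}

ranked = rank_metrics(metrics, error_rate)
print(ranked)
```

In this toy data the constant configuration field is discarded and optical power ranks ahead of flow count. A real deployment would need far more samples and a relevance measure chosen with domain knowledge, since correlation alone says nothing about cause.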

Reactive to proactive

Planning, operating, and managing optical networks is hard. Typically, pre-determined thresholds and heuristics are deeply integrated within several network functions, which not only necessitates extremely careful verification tests and continuous maintenance, but also leads to non-optimal utilisation of valuable network resources. This approach is inherently reactive: actions are taken only after an event, which can mean unwanted changes in network conditions or even downtime. The key issue to understand here is that networks are non-stationary, and deterministic rules for operating any non-stationary system can’t guarantee optimality.

More importantly, the performance of such rule-based models is limited by the skill and experience of the designer. Luckily, this is territory for the application of machine learning frameworks, learning from past behaviour and translating the data lake into actionable insight. In particular, precise estimates of how a network is behaving at a given time offer enormous optimisation benefits. Furthermore, predictive capabilities would allow better use of network resources, reducing CAPEX and improving user experience.

Last, but not least, prescribing user actions based on real-time network status could revolutionise the service and support delivery. The overall strategy should be to create unique network operational patterns, effectively creating a knowledge pool, which may then be exploited across the end-to-end network.
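To make the contrast between a pre-determined threshold and behaviour learned from past data concrete, here is a minimal sketch. It is not a machine learning model: an exponentially weighted moving average is used as the simplest possible stand-in for a learned baseline. The function names, the synthetic received-power readings, the -12 dBm limit and the 0.5 dB deviation band are all assumptions chosen for illustration.

```python
def fixed_threshold_alarms(readings, limit_dbm):
    """Indices where a reading falls below a static, pre-determined limit."""
    return [i for i, r in enumerate(readings) if r < limit_dbm]


def adaptive_alarms(readings, alpha=0.1, max_dev_db=0.5):
    """Indices where a reading deviates from a moving baseline.

    The baseline is an exponentially weighted moving average of past
    readings, so it follows slow drift in the signal.
    """
    alarms = []
    baseline = readings[0]
    for i, r in enumerate(readings):
        if abs(r - baseline) > max_dev_db:
            alarms.append(i)  # outlier: flag it and leave the baseline alone
        else:
            baseline = (1 - alpha) * baseline + alpha * r
    return alarms


# Synthetic received power in dBm: slow drift plus one sudden 1 dB dip
readings = [-10.0 - 0.01 * i for i in range(100)]
readings[60] -= 1.0

fixed = fixed_threshold_alarms(readings, limit_dbm=-12.0)
adaptive = adaptive_alarms(readings)
print("fixed threshold:", fixed)
print("adaptive baseline:", adaptive)
```

In this synthetic trace the dip never crosses the static limit, so the fixed rule stays silent, while the drifting baseline flags the sample where the dip occurs. Tightening the static limit would catch the dip but would eventually trip on the slow drift alone, which is the non-stationarity problem described above.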

Business models

While the technology marketplace is bustling, we mustn’t forget that discussion on analytics should be about business goals, rather than available technologies. Not many solution providers can make the impact of diverse analytics solutions on business operations clear to the client. The promise of cloud-based hosting and support for various machine learning tools isn’t enough. On the flipside, network vendors need to focus on opportunity costs and not get bogged down by the direct costs of deploying analytics solutions.

Three key digital industry drivers are: new digital revenue streams; continuous revenue based on a product-based service mindset; and revenue proportionate to customer base. The first waves of analytics could disrupt optical network optimisation and operational management, revolutionising the industry from network design principles to customer experience. And it’s the senior leadership and strategists who face the biggest initial challenges, as analytics-driven solutions may not match conventional wisdom or gut feelings. A certain level of scepticism may be necessary to carefully draw cognition into networks, together with an open mind towards alternatives.

Regardless of the approach, optical networking businesses should prepare themselves for a global analytics-driven product and portfolio strategy, rather than chunks here and there. Despite the potential lead time, the time to act is now.

Dr Danish Rafique is senior innovation manager at ADVA Optical Networking